Mohamed Mahdy, ISSAP, CISSP, SSCP, explains how he is using Data Processing Units in conjunction with a container orchestration platform and cloud native solutions to ease threat investigations and incident response in AI-driven environments.

Disclaimer: The views and opinions expressed in this article belong solely to the author and do not necessarily reflect those of ISC2.

The rise of AI factories and hyperscale environments, powering everything from generative AI to autonomous systems, has stretched the limits of traditional infrastructure. As these facilities began to process exabytes of data and demand near-real-time communication between thousands of GPUs, CPUs struggled to balance application logic with infrastructure tasks like networking, encryption and storage management. That's why Data Processing Units (DPUs) have come to the fore: purpose-built accelerators that offload these "housekeeping" tasks, freeing CPUs and GPUs to focus on what they do best.

DPUs and AI Factories: What’s the Added Value?

DPUs are specialized system-on-chip (SoC) devices designed to handle data-centric operations such as network virtualization, storage processing and security enforcement. By decoupling infrastructure management from computational workloads, we can reduce latency, lower operational costs and enable AI factories to scale horizontally.

Here’s what they do:

- Accelerate Data Movement: They enable high-speed networking (e.g., 400 Gbps Ethernet) and RDMA (Remote Direct Memory Access) for streaming data directly between storage and GPUs, bypassing CPU bottlenecks. In my environment, NVIDIA BlueField DPUs enable GPUDirect Storage, which optimizes my data pipelines for massive AI training jobs.

- Offload Infrastructure Tasks: From encryption to software-defined networking (SDN), DPUs offload resource-intensive operations to hardware, ensuring consistent performance.

- Enable Zero Trust Security: Hardware-rooted trust zones and micro-segmentation protect sensitive datasets and prevent lateral movement in multi-tenant environments.
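Micro-segmentation comes down to a default-deny rule: a flow between two workload segments is allowed only if a policy explicitly permits it. The minimal Python sketch below models that logic in software only. On a DPU, equivalent rules would be enforced in hardware. The segment names, ports and the `Flow` type are hypothetical and are not taken from any BlueField API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A simplified flow between two workload segments (hypothetical model)."""
    src_segment: str
    dst_segment: str
    dst_port: int


# Hypothetical allow-list: only these segment-to-segment flows are permitted.
ALLOWED_FLOWS = {
    ("training-jobs", "dataset-store", 443),
    ("inference-api", "model-registry", 443),
}


def is_allowed(flow: Flow) -> bool:
    """Default deny: a flow passes only if it matches an explicit allow rule."""
    return (flow.src_segment, flow.dst_segment, flow.dst_port) in ALLOWED_FLOWS


if __name__ == "__main__":
    # Permitted: training jobs reading the dataset store over TLS.
    print(is_allowed(Flow("training-jobs", "dataset-store", 443)))
    # Blocked: lateral movement from the inference tier to the dataset store.
    print(is_allowed(Flow("inference-api", "dataset-store", 443)))
```

Because anything not listed is denied, a compromised inference workload cannot reach the training datasets unless someone deliberately adds that rule.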

If it helps, here's an analogy I use. If CPUs are the "airport managers" coordinating passengers (applications), I view DPUs as the security and operations teams: handling baggage (data), traffic control (networking) and security to keep everything running smoothly.

Integrating a Container Orchestration Platform with DPUs: Enabling Scale

Kubernetes has become the de facto orchestrator for containerized AI workloads. However, I’ve found that its native networking stack lacks the depth I need to manage complex traffic (such as non-HTTP protocols) or to enforce granular security policies across distributed environments. This is where I've found cloud native solutions to be invaluable.

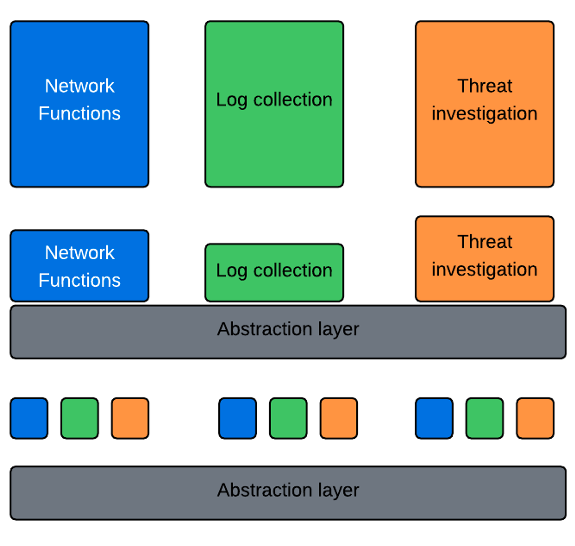

Figure 1 shows how deployments have evolved from classic network functions to modern cloud-native implementations.

Figure 1: Deployment evolution

I had previously run this setup on virtual machines for a side project, but, as expected, it consumed more resources than was acceptable. Then I came across DPUs. Using them in conjunction with cloud-native solutions has given me several specific advantages:

- Unified Traffic Management: A key advantage is a single ingress/egress point for L4-L7 load balancing, API gateway services and DDoS protection. This has eliminated the need for multiple external firewalls.

- Implementation of Zero Trust at the Data Plane: A combination of hardware-accelerated micro-segmentation, mutual TLS (mTLS) and deep packet inspection (DPI) enables me to enforce role-based access controls and prevent unauthorized lateral movement.

- Operational Efficiency: Offloading tasks like SSL/TLS termination and packet processing to DPUs has reduced CPU overhead by up to 70% in my measurements, enabling line-rate performance for security and traffic steering.
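With mutual TLS, the server presents its own certificate and also demands and verifies a client certificate. The minimal sketch below uses Python's standard `ssl` module to show which settings that involves. In the DPU setup described here, the handshake work would be offloaded to the DPU rather than run in application code. The helper name `make_mtls_server_context` is hypothetical, and the certificate paths are placeholders that the sketch leaves out so it runs without any certificates on disk.

```python
import ssl
from typing import Optional


def make_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that requires and verifies client certificates."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse legacy protocol versions.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: clients without a valid certificate fail the handshake.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own certificate chain and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # CA bundle used to validate the certificates clients present.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


if __name__ == "__main__":
    ctx = make_mtls_server_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED)
```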

Adding Threat Investigation

At this point in my process, I added containerized threat investigation tools on top of our DPU. This was necessary because, while DPUs and cloud-native network functions (CNFs) streamlined my environment, I still needed actionable insights. In my case, I employed open-source tools like TheHive and MISP (Malware Information Sharing Platform).

These are the elements I used, how they fit together and their expected output in a high-level workflow:

- Log Collection: CNF forwards traffic logs (e.g., connection attempts, policy violations) to Fluentd, a unified logging layer.

- Alert Triage: TheHive parses our logs to create investigation cases, tagging anomalies like suspicious IPs or unauthorized API requests.

- Threat Intelligence: MISP correlates these indicators of compromise (IoCs) with global threat feeds, enabling proactive defense.
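The three stages above can be sketched in a few lines of Python. This is a stand-in for the Fluentd, TheHive and MISP APIs, not a use of them. The log field names (`src_ip`, `event`), the `SUSPICIOUS_EVENTS` set and the in-memory IoC set are all hypothetical. They are chosen only to show how collected logs become tagged cases and how those cases are then matched against threat intelligence.

```python
from dataclasses import dataclass, field

# Hypothetical event types the CNF might report.
SUSPICIOUS_EVENTS = {"policy_violation", "unauthorized_api_request"}

# Hypothetical IoC feed; in the workflow above, MISP would supply these indicators.
KNOWN_BAD_IPS = {"203.0.113.7", "198.51.100.23"}


@dataclass
class Case:
    """A minimal stand-in for an investigation case."""
    src_ip: str
    events: list = field(default_factory=list)
    tags: set = field(default_factory=set)


def triage(logs):
    """Alert triage: group suspicious records into one tagged case per source IP."""
    cases = {}
    for record in logs:
        if record["event"] in SUSPICIOUS_EVENTS:
            case = cases.setdefault(record["src_ip"], Case(record["src_ip"]))
            case.events.append(record["event"])
            case.tags.add(record["event"])
    return list(cases.values())


def correlate(cases, known_bad_ips):
    """Threat intelligence: mark cases whose source IP matches a known IoC."""
    for case in cases:
        if case.src_ip in known_bad_ips:
            case.tags.add("ioc-match")
    return cases


if __name__ == "__main__":
    # Log collection: records as a CNF might forward them.
    logs = [
        {"src_ip": "203.0.113.7", "event": "policy_violation"},
        {"src_ip": "192.0.2.10", "event": "connection_attempt"},
        {"src_ip": "192.0.2.44", "event": "unauthorized_api_request"},
    ]
    for case in correlate(triage(logs), KNOWN_BAD_IPS):
        print(case.src_ip, sorted(case.tags))
```

Keeping triage and correlation as separate steps mirrors the split between the case-management tool and the threat intelligence platform. Either step can be replaced without touching the other.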

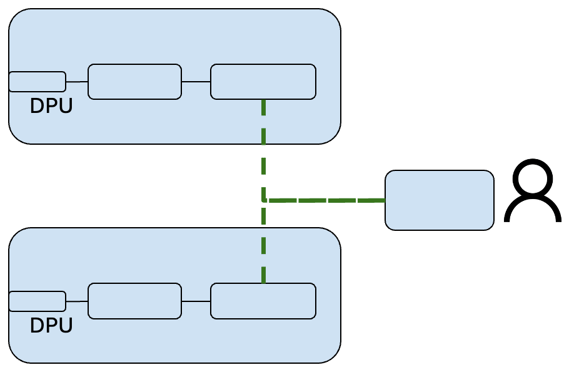

In this design, logs flow from the DPU-accelerated CNFs to TheHive and MISP for automated threat analysis.

Traffic passing through the hardware DPU is processed by cloud-native functions that provide L2 to L7 services. At each DPU, we forward logs to an on-chip log collection system, from which they are fed into our threat intelligence platform for further correlation.

Figure 2: Traffic flow

This deployment changes where the log collection and threat intelligence systems sit. Rather than being centralized at an overlay layer, they are now much closer to the traffic sources, at the hardware level.
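Collecting logs next to the traffic source usually means each node tags records with their origin and ships them in batches. That way, the central platform can still correlate events across nodes. The Python sketch below illustrates this general pattern. `EdgeCollector`, the `dpu_id` field and the `send` callback are hypothetical and stand in for whatever on-chip collector and transport a real deployment uses.

```python
import json
from typing import Callable, Dict, List


class EdgeCollector:
    """Buffers log records at one node and forwards them in batches (hypothetical)."""

    def __init__(self, dpu_id: str, batch_size: int, send: Callable[[str], None]):
        self.dpu_id = dpu_id
        self.batch_size = batch_size
        self.send = send
        self.buffer: List[Dict] = []

    def collect(self, record: Dict) -> None:
        # Tag each record with its origin so central correlation can attribute it.
        self.buffer.append({**record, "dpu_id": self.dpu_id})
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Ship the current batch as one JSON payload, then clear the buffer.
        if self.buffer:
            self.send(json.dumps(self.buffer))
            self.buffer = []


if __name__ == "__main__":
    sent = []  # stands in for the link to the threat intelligence platform
    collector = EdgeCollector("dpu-01", batch_size=2, send=sent.append)
    for event in ("connection_attempt", "policy_violation", "connection_attempt"):
        collector.collect({"event": event})
    collector.flush()  # push the final partial batch
    print(len(sent))  # 2 batches
```

Batching keeps per-record overhead on the link low. The explicit `flush()` ensures a partial batch is not stranded when traffic goes quiet.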

Beyond improving visibility, moving the logging and threat intelligence systems from physical and virtual machine deployments to cloud-native ones reduced our compute resource usage by up to 60% and our memory usage by up to 50%.

There is another benefit: in production environments, compute and memory savings of this size translate into lower power consumption and a smaller environmental footprint.

An Accelerated Future

AI factories represent a paradigm shift in computing and this warrants – even demands – an equally radical evolution in cybersecurity. By leveraging the inherent capabilities of DPUs and CNFs, we can indeed build security into the fabric of AI infrastructure.

Note that this architecture isn't just about faster networking or efficient orchestration; it's about enabling real-time threat investigation and proactive defense at the scale and speed that AI demands. It is about providing the essential "security ingredient" for protecting sensitive models, massive datasets and critical AI-driven processes.

The future of AI security isn't bolted on; it's accelerated, distributed and intrinsically embedded within the data path. This is how we ensure AI factories are not just powerful, but inherently secure.

Mohamed Mahdy, ISSAP, CISSP, SSCP, has 12 years of experience in IT and cybersecurity. He has held support, consulting and product management roles, with responsibility for implementation, support, solution design, architecture and technical marketing for network and application security solutions.