Naveen Kohli, CISSP, CSSLP, CC

Introduction

The integration of Artificial Intelligence (AI) into software testing is revolutionizing Quality Assurance (QA) practices. One of the most cutting-edge applications of the technology is using generative AI models to assist QA teams in the creation and execution of abuse cases.

Abuse cases represent non-standard, often malicious actions a user might take to exploit a software system. Crafting these scenarios traditionally relies on human intuition, experience, and existing knowledge of emerging threats, a process that is both time-consuming and potentially incomplete.

Generative AI can significantly aid QA teams in abuse case testing. Instead of manually devising each abuse scenario, QA teams can use AI to rapidly generate a wide array of potential abuse cases, speeding up the testing phase in the process.

With AI's ability to generate a large number of test cases, QA teams can achieve more comprehensive test coverage, helping to verify that software can counteract both known and unforeseen malicious actions.

To generate effective abuse cases with Generative AI, you have to write prompts that clearly describe your test scenario. For example, if you want to test the login page of your web application, a prompt like “Generate abuse cases for login page of a web application” is very open-ended. Given such a prompt, an AI engine will make assumptions about the elements on the page, and based on those assumptions it can produce abuse cases that are not relevant to your application.
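One way to keep prompts specific is to assemble them from an explicit description of the screen under test. The following is a minimal sketch, not a definitive implementation: `ViewDescription` and `build_abuse_case_prompts` are hypothetical names, and the role/content message layout is an assumption about how your chat client accepts input.

```python
from dataclasses import dataclass, field


@dataclass
class ViewDescription:
    """Hypothetical description of the screen under test."""
    name: str
    elements: list[str] = field(default_factory=list)
    submit_action: str = ""


def build_abuse_case_prompts(view: ViewDescription) -> list[dict[str, str]]:
    """Return two chat messages: one sets the role, one describes the view."""
    element_list = ", ".join(view.elements)
    request = (
        f"I have a {view.name} with {element_list}. "
        f"{view.submit_action} "
        "What are the different abuse cases for this view of the web application?"
    )
    return [
        # Assumption: the role-setting sentence is sent as a "system" message.
        {"role": "system", "content": "You are a security test expert."},
        {"role": "user", "content": request},
    ]


login_view = ViewDescription(
    name="login view",
    elements=[
        "a field for login email",
        "a field for password",
        "a check box for remember me functionality",
        "a link that allows the creation of a new account",
        "a link for password recovery",
    ],
    submit_action="User clicks on a button to submit login information for authentication.",
)

for message in build_abuse_case_prompts(login_view):
    print(f"{message['role']}: {message['content']}")
```

Listing the elements explicitly leaves the AI engine less room to guess what is on the page, which is the point of a specific prompt.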

Real-world Application

Let’s demonstrate how to use Generative AI to generate abuse cases for a real application.

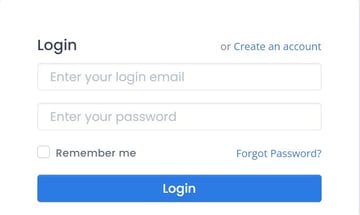

The view above shows the login page of a website.

The following is my chat with ChatGPT (GPT-4) to generate abuse cases for this login page.

Naveen: You are a security test expert.

Naveen: I have a login view with a field for login email, a field for password, a check box for remember me functionality, a link that allows the creation of a new account and link for password recovery. User clicks on a button to submit login information for authentication. What are the different abuse cases for this view of the web application?

The above prompts generated the following output.

| Threat Surface | Threat Vector | Threat Agents | Preconditions | Steps | Postconditions | Potential Impact |
|---|---|---|---|---|---|---|
| Login Email & Password Fields | Brute Force Attack | External attacker, bot | No account lockout or rate-limiting mechanism in place | Use a tool or script to attempt numerous username/password combinations | Possible unauthorized access | Data breach, compromised user accounts |
| | Credential Stuffing | External attacker | Previously leaked usernames/passwords available | Use previously leaked credentials to gain access | Unauthorized access if users reused passwords | Data breach, compromised user accounts |
| | SQL Injection | External attacker | Lack of input validation, non-parameterized queries | Inject malicious SQL code into fields to manipulate database queries | Unauthorized database access or manipulation | Data breach, data corruption |
| | Phishing/Malicious Redirect | Phisher | User can be tricked into visiting a fake login page | Trick user into providing credentials on a fake site | Credentials stolen | Unauthorized access, compromised account |
| Remember Me Checkbox | Persistent Cookie Theft/Session Hijacking | External attacker, XSS attacker | Cookies not set as secure or HTTPOnly | Exploit vulnerabilities to steal persistent authentication cookie | Unauthorized access using stolen session | Data breach, compromised user account |
| Create New Account Link | Account Enumeration during Signup | External attacker | Registration reveals if an email is already registered | Attempt to register known emails to check for account existence | Attacker identifies valid accounts | Targeted attacks, phishing |
| Password Recovery Link | Account Enumeration via Password Recovery | External attacker | Password recovery shows different responses for "account exists" and "account doesn't exist" | Attempt to register known emails to check for account existence | Attacker identifies valid accounts | Targeted attacks, phishing |
| | Password Reset Token Hijacking | Email attacker, MITM attacker | Insecure transport, compromised email, tokens not expiring swiftly | Access the reset token sent via email | Unauthorized password change | Compromised user account |
| Login Button (Submission) | DoS through Rapid Submissions | Bot, external attacker | No CAPTCHA or rate-limiting during login attempts | Repeatedly submit login data rapidly to exhaust system resources | System slowdown, potential failure | Service disruption |

Remember, while these are a set of common abuse cases, real-world applications might have more specifics depending on their context, architecture, and additional features. It's also essential to combine these abuse cases with other security considerations like HTTPS, multifactor authentication, and monitoring/logging for a comprehensive security strategy.
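If you save output like this as a pipe-delimited table, you can load it into records that the QA team can review one by one. The following is a minimal sketch under stated assumptions: `parse_abuse_case_table` is a hypothetical helper, it assumes every row starts with `|`, that no cell contains a literal `|`, and that a blank first cell means "same threat surface as the row above". The sample uses only three of the seven columns to stay short.

```python
def parse_abuse_case_table(markdown: str) -> list[dict[str, str]]:
    """Parse a pipe-delimited table into one dict per abuse case."""
    rows = []
    for line in markdown.strip().splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip anything that is not a table row
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        # Skip the |---|---| separator row under the header.
        if all(cell and set(cell) <= set("-:") for cell in cells):
            continue
        rows.append(cells)
    if not rows:
        return []

    header, body = rows[0], rows[1:]
    records = []
    last_surface = ""
    for cells in body:
        record = dict(zip(header, cells))
        # Assumption: an empty first cell repeats the previous threat surface.
        if record[header[0]]:
            last_surface = record[header[0]]
        else:
            record[header[0]] = last_surface
        records.append(record)
    return records


sample_table = """
| Threat Surface | Threat Vector | Potential Impact |
|---|---|---|
| Login Email & Password Fields | Brute Force Attack | Data breach, compromised user accounts |
| | Credential Stuffing | Data breach, compromised user accounts |
| Remember Me Checkbox | Persistent Cookie Theft/Session Hijacking | Data breach, compromised user account |
"""

for case in parse_abuse_case_table(sample_table):
    print(f"{case['Threat Surface']}: {case['Threat Vector']}")
```

Filling in the blank threat surface cells means each record can be assigned, filtered, or tracked without referring back to the rows around it.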

As you can see, Generative AI has provided a very good starting point for the QA team to investigate further and add value. The team should review this output in the context of the application, which is what ChatGPT itself recommends in its closing remark. It is not good practice to blindly trust the output generated by any AI system in isolation. It is a known issue with Generative AI that, depending on the quality and quantity of the training set, it can hallucinate and generate content that may not be valid in the context of the application. A Russian proverb says, “Trust but Verify”. The same applies here: verify the suggestions provided by Generative AI.
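One way to verify a suggestion is to check whether its precondition actually holds in your application. Take the account enumeration case for the password recovery link, whose precondition is that the page responds differently for existing and non-existing accounts. The following is a minimal sketch of that check: `RecoveryResponse` and `reveals_account_existence` are hypothetical names, the responses are assumed to have been captured by your own test tooling, and the comparison ignores timing and header differences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryResponse:
    """Hypothetical capture of what the password recovery page returned."""
    status_code: int
    body: str


def reveals_account_existence(
    known: RecoveryResponse, unknown: RecoveryResponse
) -> bool:
    """Return True if the two responses differ in a way an attacker could observe."""
    return (known.status_code, known.body.strip()) != (
        unknown.status_code,
        unknown.body.strip(),
    )


# Illustrative responses only; real ones come from your application under test.
registered = RecoveryResponse(200, "A reset link has been sent to your email.")
unregistered = RecoveryResponse(404, "No account found for this email.")
uniform = RecoveryResponse(200, "If the account exists, a reset link has been sent.")

print(reveals_account_existence(registered, unregistered))  # True: precondition holds
print(reveals_account_existence(uniform, uniform))          # False: not applicable
```

If the check returns False for your application, the abuse case can be recorded as reviewed and not applicable rather than silently dropped.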

I will mention it one more time: good prompts that describe the test scenario are the key to generating relevant abuse cases.

Conclusions

Generative AI stands poised to revolutionize the way QA teams approach abuse case testing. By generating a broader spectrum of potential threats and vulnerabilities, this technology enhances software security and equips QA teams with a dynamic toolset, making software not just functional but robustly secure against the continually changing threat landscape.