Responsible AI is “the younger cousin of privacy and security”, Twitter’s former head of AI ethics told ISC2 Congress in a keynote session in Nashville, Tennessee, this week, as she laid out a vision for how society can audit algorithms and ensure accountability in the post-ChatGPT era.

Dr Rumman Chowdhury, now chief scientist at Parity Consulting, told the audience that today’s conversations about AI look similar to those she was having in 2017, when she headed Accenture’s responsible AI practice.

Then, as now, people asked: “Will AI come alive and blow up the planet? Will I have my job because of AI?” Back then, she said, “A lot of us worked to actually shift that narrative towards the things that matter to us in our everyday lives.”

Generative AI changing the conversation

Chowdhury noted that while generative AI had initiated a whole new wave of thinking about AI, the questions were essentially the same. What is different, she added, is “the accessibility of these systems” and the breadth and depth of the data used to train them.

At the same time, she said, thinking about governance has advanced: “A lot of companies have very established teams even have established governance practices… So we're smarter about the impacts of AI systems.”

Policy and regulatory efforts have also progressed, with the likes of the EU’s Digital Services Act (DSA) and upcoming AI Act, and NIST’s AI Risk Management Framework. The DSA, for instance, states that companies must show their algorithmic systems do not violate human rights or contribute to gender-based violence.

While government interest and regulation are necessary, Chowdhury said, they should not come at the expense of collaboration and open access, nor stifle innovation. “There's a scary idea of some sort of malicious agent that's getting this code and they're doing terrible things. And bad actors exist. … But it ignores all the good things that happen when code is shared freely, data is shared freely.”

But, Chowdhury continued, while there is a “robust civil society” around AI, with people adept in its legal and societal impacts, “what's missing in our little world is the cultivation of independent third parties that are technical in nature.”

The road to legislating AI

According to Chowdhury, legislation could open a path to algorithmic evaluation, while APIs make it easier to interact with models. “My passion, and what I'm working on now, is creating this robust, individual, independent third-party ecosystem to provide external review and accountability.”

It is still not entirely clear what algorithmic auditing should look like, she said, and appropriate certifications and standards have yet to emerge. “Anybody can decide to go on LinkedIn and say I'm an auditor, there are plenty of people who've popped up training on algorithmic auditing. But we don't actually have a way of certifying who is an auditor.”

Perhaps more concerning is the uncertainty around the legal framework for testing models or exposing vulnerabilities.

“Because let's say you do find a problem with a model, where do you go?” she said. “Who is the authoritative body to decide whether or not this is truly a vulnerability? What is the company's responsibility of responding to that vulnerability that you found? Again, we have none of these institutions at the moment.”

Chowdhury highlighted that she had told a congressional hearing last June that “We actually need support for AI model access to enable independent research and auditing capabilities… people actually do this work, but they do it in a very ad hoc fashion.”

And, crucially, she said, “and I learned this from the security community,” there is a need for “legal protections for independent red teaming and ethical hacking.”

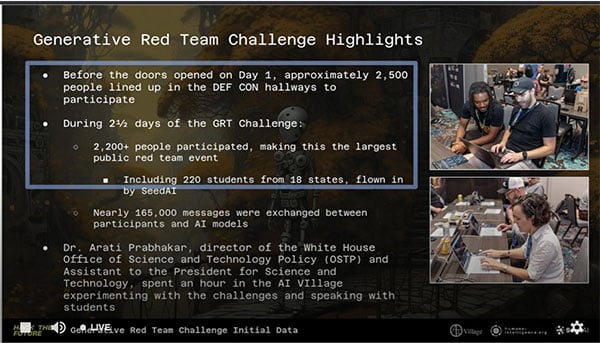

Chowdhury said her non-profit organization, Humane Intelligence, had sponsored a competition at DEF CON to uncover harms in AI models, and is working to expand alternative pathways into the field. “The point we are making is that you don't actually have to have a PhD in order to audit an algorithm.”

The organization has a public policy paper, produced in conjunction with NIST, scheduled for early 2024, along with a series of research papers. It also aims to launch an open-source evaluation platform and to offer for-profit red-teaming and model-testing services.

Ultimately, Chowdhury said, the aim was to build an open access community “where everybody can be part of evaluating and auditing AI models. This is important. And it's critically important to do this in a way that still protects security and privacy.”

- ISC2 Security Congress is taking place until October 27, 2023 in Nashville, TN and virtually. More information and on-demand registration can be found here.

- ISC2 SECURE Washington, DC takes place in-person on December 1, 2023 at the Ronald Reagan Building and International Trade Center. The agenda and registration details are here.

- ISC2 SECURE Asia Pacific takes place in-person on December 6-7, 2023 at the Marina Bay Sands Convention Centre in Singapore. Find out more and register here.