By Adam Kohnke, CISSP

Amazon Web Services (AWS) grew 36.5% year over year in Q1 2022, according to business news outlet CNBC. With more than a million active users spread across 190 countries and a service portfolio offering 200 unique products, AWS is a market powerhouse in the cloud hosting space. That also makes it a prime target for attackers and advanced persistent threats.

I have experience in developing techniques to simulate the potential for abuse against common AWS services. Please keep in mind this article is not meant to serve as direct, enterprise-specific security advice or guidance. It can, however, facilitate improved protections for such a pervasive cloud computing platform.

Planning and Scoping AWS Penetration Tests

AWS requires advance notice of penetration testing for most of its services before any activity begins, so pre-announcement should be part of all planning efforts. However, when I last checked in late 2021, EC2, RDS, CloudFront, Aurora, API Gateway, Lambda, Lightsail and Elastic Beanstalk resources did not require advance notice of testing.

To help scope the test and drive efficiency, other early planning considerations for an AWS penetration test should include a review of AWS billing statements to understand which resources exist, which AWS accounts those resources exist in, and how many instances of each resource exist within in-scope accounts.
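As a rough illustration of that scoping step, the sketch below counts distinct billed resources per account and service, then lists the services that fall outside the no-announcement list given above. The line-item field names (`account_id`, `service`, `resource_id`) and the sample rows are hypothetical; a real billing export would need to be mapped onto them first.

```python
from collections import defaultdict

# Services the article lists as not needing advance notice (late 2021).
# Confirm against AWS's current policy before relying on this set.
NO_ADVANCE_NOTICE = {
    "EC2", "RDS", "CloudFront", "Aurora",
    "API Gateway", "Lambda", "Lightsail", "Elastic Beanstalk",
}


def summarize_scope(line_items):
    """Count distinct resources per (account, service) pair."""
    resources = defaultdict(set)
    for item in line_items:
        key = (item["account_id"], item["service"])
        resources[key].add(item["resource_id"])
    return {key: len(ids) for key, ids in resources.items()}


def services_to_announce(counts):
    """Services seen in billing that are not on the no-announcement list."""
    return sorted(
        {service for (_account, service) in counts
         if service not in NO_ADVANCE_NOTICE}
    )


# Hypothetical line items; the same resource can be billed more than once.
SAMPLE_ITEMS = [
    {"account_id": "111111111111", "service": "EC2", "resource_id": "i-aaa"},
    {"account_id": "111111111111", "service": "EC2", "resource_id": "i-aaa"},
    {"account_id": "111111111111", "service": "EC2", "resource_id": "i-bbb"},
    {"account_id": "111111111111", "service": "S3", "resource_id": "bucket-a"},
    {"account_id": "222222222222", "service": "Lambda", "resource_id": "fn-a"},
]

if __name__ == "__main__":
    counts = summarize_scope(SAMPLE_ITEMS)
    for (account, service), total in sorted(counts.items()):
        print(account, service, total)
    print("Check announcement requirements for:", services_to_announce(counts))
```

Counting distinct resource identifiers rather than raw line items avoids inflating the scope when one resource appears under several usage types.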

From there, establish partnerships with internal stakeholders and in-scope vendors to fully define the testing expectations, detailed target lists, testing timelines and escalation paths for pre-existing indicators of security breaches. Also prepare for possible disruption caused by testing procedures and obtain formal permission to conduct the testing (the proverbial “Get out of Jail” card).

Information Gathering, Enumerating and Footprinting AWS Accounts

Let's assume that front-end web resources have been deployed by the "victim" organization connecting to its AWS infrastructure. In this scenario, an AWS access key was leaked, inappropriately shared or discovered by would-be attackers. User enumeration may be achieved through the use of tools such as theHarvester or Hunter.io, allowing discovery of email addresses associated with the victim organization.

The resulting email addresses can be paired with man-in-the-middle frameworks, such as Evilginx2, to perform low-cost and sophisticated phishing campaigns leading to further exposure and exploitation of AWS user credentials.

Internal pen test teams should explore user enumeration and exploitation tools to assess how exposed the organization’s users are to social engineering and multifactor bypass techniques. Evilginx2 excels at the latter.

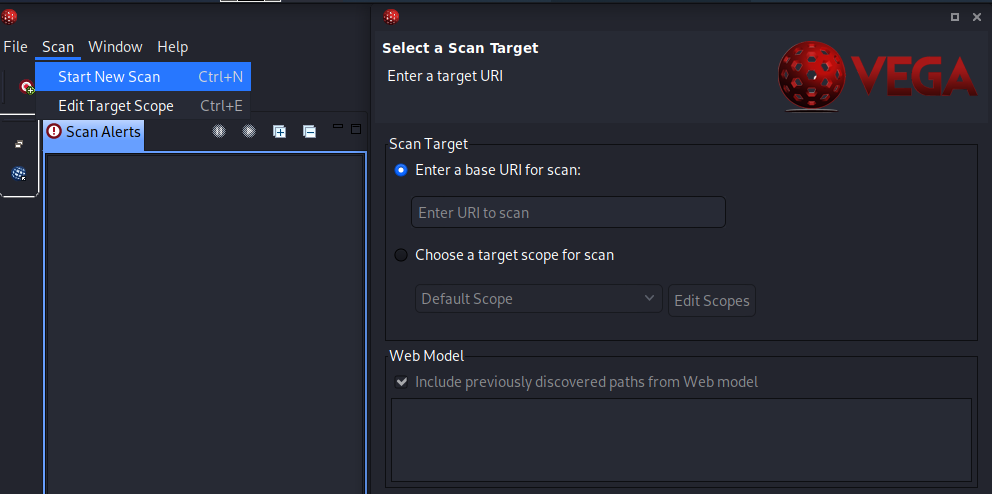

Scanning for Vulnerabilities with Vega

To obtain a full account of the organization's attack surface, it is beneficial to perform vulnerability scanning regardless of whether an AWS key has been provided for testing or not.

Subgraph's Vega vulnerability scanner is an open-source scanning tool that covers a variety of needs for web application and cloud penetration testing. Vega can perform common and specialized vulnerability tests such as SQL injection, API testing, cross-site scripting and assessing adequacy of Transport Layer Security (TLS) configuration. It includes an intercepting proxy for more tactical-based network traffic inspections.

Vega requires that Java 8 be installed and set as the current version, so keep this in mind during installation and use. Vega scan results should be jointly assessed with internal stakeholders and the pen test team to filter out false positives and determine which vulnerabilities deserve threat modeling effort and remediation prioritization.

Figure 1. Clicking the “Start a New Scan” button initiates the scan process. Then, on the right-hand side, choose a target URI or website address to probe.

Figure 2. Website mappings from the Vega crawler are displayed on the left, opposite an alert summary showing details and severity of discovered vulnerabilities.

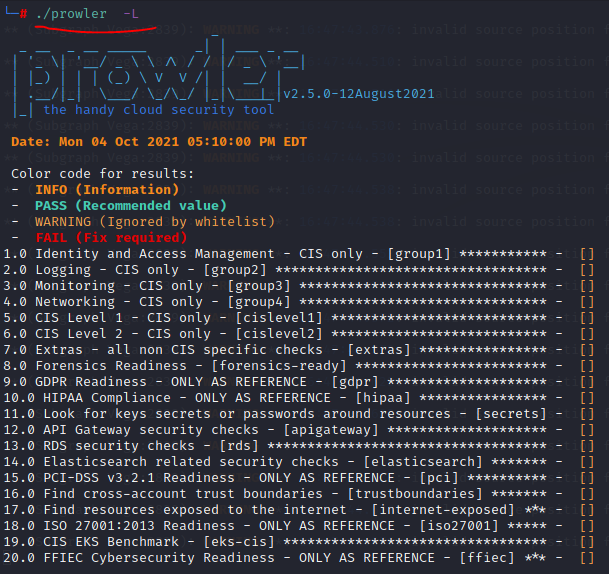

Audit Using Prowler

Pivoting into the target AWS account using the supplied AWS key, internal pen test teams may perform a more thorough analysis of potential targets and resources within the AWS account using Prowler. A command-line security auditing tool, Prowler performs approximately 150 individual checks against recognized security and privacy standards like Center for Internet Security (CIS), SOC 2 and HIPAA.

Prowler leverages the AWS command line interface (CLI) under the covers to perform its checks, so installation of the AWS CLI is a prerequisite. Invoking Prowler requires some caution. The base ‘./prowler’ command runs all 150 checks and reports both the controls that are adequately secured and those that fail. That produces a lot of noise, wasting precious test and analysis time. Internal penetration testers and malicious actors alike are interested in where the vulnerable resources and control failures are, so a better use of time is to run only the checks that serve the engagement objectives and to report only the checks that fail. The -q switch limits results to failed checks, and the -g switch restricts the run to a specific group of controls, allowing Prowler assessments to focus strictly on risky AWS resources.
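A minimal sketch of assembling that focused invocation from a script follows. It uses only the -q and -g switches described above. The helper name `prowler_command` and the default binary path are illustrative assumptions, and the sketch builds the argument list without executing anything.

```python
import shlex


def prowler_command(group=None, failed_only=True, binary="./prowler"):
    """Build an argument list for a focused Prowler run.

    group:       a check group such as "group1" (passed with -g)
    failed_only: add -q so that only failed checks are reported
    """
    argv = [binary]
    if failed_only:
        argv.append("-q")
    if group:
        argv += ["-g", group]
    return argv


if __name__ == "__main__":
    # Prints: ./prowler -q -g group1
    print(shlex.join(prowler_command(group="group1")))
```

The returned list can be handed to `subprocess.run` unchanged, which keeps shell quoting out of the picture.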

Figure 3. The groupings of security checks Prowler can do. Use -g to target groups of interest such as ‘./prowler -g group1’ to perform identity and access management checks.

Figure 4. Using the -q switch to focus testing on only failed test results paired with -g to focus on specific testing groups allows for a quick, efficient and effective use of Prowler. Tests Extra71-73 are displayed but not actually assessed by the tool.

Enumerating IAM Users with Pacu

Developed by Rhino Security Labs, Pacu is a command-line, Python-based exploitation framework that focuses specifically on providing an end-to-end solution for internal security teams to simulate an AWS cyber breach across the entire cyber kill chain.

Similar to Prowler, Pacu leverages compromised AWS credentials to perform its assessments and provides the penetration testing team (or would-be attackers) numerous modules to execute a variety of attacks on AWS resources.

Pacu is also excellent at evading AWS GuardDuty by changing its user agent data upon starting the tool. Beginning with identity and access management (IAM), Pacu’s first beneficial use is to enumerate the current privileges offered by the provided (leaked) credentials, fully enumerate all other IAM users or roles within the account and see if current access can be escalated to an administrative level.

In Figure 5, the ‘run iam__enum_permissions --all-users --all-roles’ command is issued to perform full enumeration of AWS IAM users and roles, producing results in JSON format. A follow-up command to enumerate all resources in the account is ‘run aws__enum_account’. Pacu can also enumerate spend reports and specific services like EC2 or S3, and download EC2 user metadata for analysis to find additional or alternate avenues of access compromise.

Figure 5. Details on IAM user accounts, their group memberships and permissions levels are captured by Pacu and saved locally. This data may be useful for social engineering or other credential-based attacks like password brute forcing if multifactor authentication is not enabled or weak password policies are enforced.
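Defenders can check the same exposure from their side without relying on Pacu's output. The sketch below assumes an IAM credential report has been exported as CSV (for example with `aws iam generate-credential-report` followed by `aws iam get-credential-report`) and lists users who have a console password but no MFA device. The sample report is hand-written and trimmed to three of the report's columns.

```python
import csv
import io


def users_without_mfa(report_csv):
    """Return users that have a console password but no active MFA device."""
    reader = csv.DictReader(io.StringIO(report_csv))
    return [
        row["user"]
        for row in reader
        if row["password_enabled"] == "true" and row["mfa_active"] == "false"
    ]


# Hand-written sample using three columns of the credential report.
SAMPLE_REPORT = """user,password_enabled,mfa_active
alice,true,true
bob,true,false
ci-deployer,false,false
"""

if __name__ == "__main__":
    for user in users_without_mfa(SAMPLE_REPORT):
        print("Password without MFA:", user)
```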

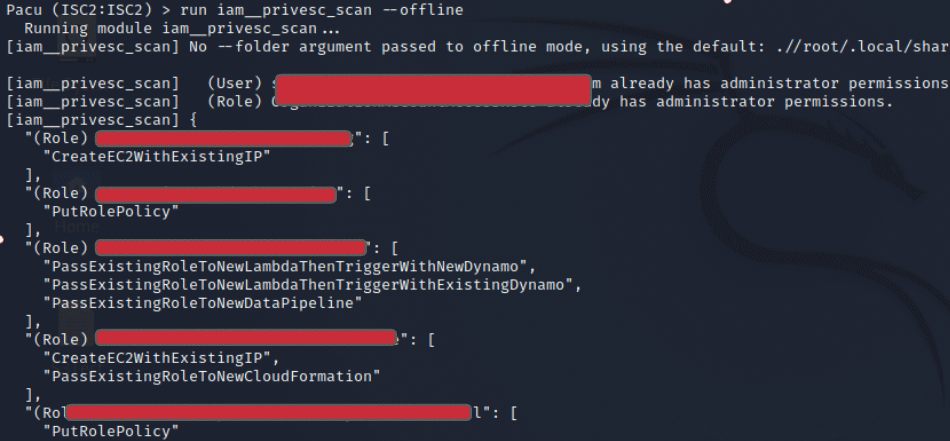

Attempting Privilege Escalation and Establishing Persistence

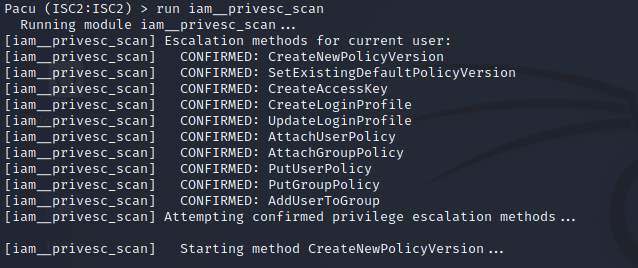

Using the ‘run iam__privesc_scan --offline’ command, Pacu will produce a report showing the privilege escalation paths available within the account for all IAM users and roles. Removing the --offline switch will run the privilege escalation module for the current user account.

The first command has tremendous value and should be reviewed with internal stakeholders even if the current user fails to escalate privileges. Why? Because it may highlight instances where other IAM user accounts or roles have unnecessary access permissions that should be removed.

Attempting privilege escalation will present all available options to the penetration testing team, with prompts provided as necessary. Once escalated privileges are achieved, the module stops.
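To make the idea of an escalation path concrete, here is a heavily simplified sketch, not Pacu's implementation. It collects the actions a policy document allows and compares them against a handful of permission combinations commonly cited as escalation routes. The path names are illustrative labels, and the sketch deliberately ignores Resource, Condition, NotAction and Deny statements, so it will over-report.

```python
import fnmatch

# A small, illustrative subset of permission combinations that can lead
# to privilege escalation. The keys are labels invented for this sketch.
ESCALATION_PATHS = {
    "CreateAccessKey": {"iam:CreateAccessKey"},
    "CreatePolicyVersion": {"iam:CreatePolicyVersion"},
    "AttachUserPolicy": {"iam:AttachUserPolicy"},
    "PutUserPolicy": {"iam:PutUserPolicy"},
    "PassRoleToNewLambda": {
        "iam:PassRole", "lambda:CreateFunction", "lambda:InvokeFunction",
    },
}


def allowed_actions(policy):
    """Collect action patterns from Allow statements of a policy document."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    actions = set()
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        listed = statement.get("Action", [])
        if isinstance(listed, str):
            listed = [listed]
        actions.update(listed)
    return actions


def permits(granted, action):
    """True if any granted pattern (wildcards allowed) matches the action."""
    return any(
        fnmatch.fnmatchcase(action.lower(), pattern.lower())
        for pattern in granted
    )


def escalation_paths(policy):
    """Names of the paths whose required actions are all granted."""
    granted = allowed_actions(policy)
    return sorted(
        name for name, needed in ESCALATION_PATHS.items()
        if all(permits(granted, action) for action in needed)
    )


SAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["iam:CreateAccessKey", "s3:GetObject"],
         "Resource": "*"},
        {"Effect": "Allow", "Action": ["lambda:*", "iam:PassRole"],
         "Resource": "*"},
    ],
}

if __name__ == "__main__":
    print(escalation_paths(SAMPLE_POLICY))
```

Anything this flags is a prompt for review with stakeholders rather than proof of an exploitable path.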

Persistence may be achieved in several ways depending on how privileged access was obtained. One method is to create a primary or secondary IAM key under another existing IAM user in the account if allowed.

Figure 6. Pacu’s Privilege Escalation scan details existing IAM users or roles that have an available path for access abuse via escalation. Any results of this command should be investigated with internal stakeholders to determine risk and required mitigation.

Figure 7. Removing the --offline switch from Pacu’s Privilege Escalation scan command will turn the command toward assessing and executing a privilege escalation scan against the current user credentials being used within the tool.

Enumerating S3 Buckets and Downloading Publicly Accessible Content

Pacu also provides a ‘run s3__download_bucket’ command that allows it to enumerate and download S3 bucket content.

If the previous IAM privilege escalation attack was successful, it is likely admin privileges were achieved and it’s game over already. For external discovery, an online web tool called GrayHatWarfare finds publicly accessible S3 buckets whose contents attackers may be able to read or modify remotely, depending on bucket permissions. GrayHatWarfare bucket searches use keyword strings to locate publicly accessible buckets. Simply enter a company name or common terminology seen on company webpages or social media sites and you may turn up buckets of interest.

Using Pacu or GrayHatWarfare for routine S3 reviews ensures that inappropriate public access to buckets is identified and quickly remediated where necessary.

Enumerating and Abusing Lambda Functions

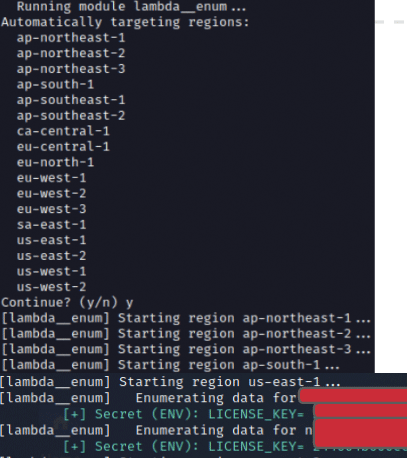

Pacu’s Lambda enumeration module is useful for revealing upfront whether secrets (license keys, access keys, etc.) are being stored within environment variables, which may grant escalated access to the current AWS environment or to external applications and systems.
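The same check is easy to approximate during an internal review. The sketch below assumes function configurations shaped like the entries returned by `aws lambda list-functions`, each with an optional `Environment.Variables` map. It flags variables whose names look sensitive or whose values resemble an AWS access key ID. The name patterns and sample functions are illustrative assumptions, not an exhaustive rule set.

```python
import re

# Variable names that often hold credentials (illustrative, not exhaustive).
SUSPICIOUS_NAME = re.compile(
    r"secret|token|password|passwd|api_?key|access_?key|license",
    re.IGNORECASE,
)
# AWS access key IDs: a known four-letter prefix plus 16 characters.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_env_secrets(functions):
    """Return (function name, variable name) pairs worth reviewing.

    Values are deliberately left out of the result so that findings can
    be shared without spreading the secret itself.
    """
    findings = []
    for function in functions:
        variables = function.get("Environment", {}).get("Variables", {})
        for name, value in variables.items():
            if SUSPICIOUS_NAME.search(name) or ACCESS_KEY_ID.search(value):
                findings.append((function["FunctionName"], name))
    return findings


# Hypothetical function configurations for demonstration.
SAMPLE_FUNCTIONS = [
    {"FunctionName": "billing-sync",
     "Environment": {"Variables": {"LICENSE_KEY": "abc-123",
                                   "LOG_LEVEL": "info"}}},
    {"FunctionName": "report-export",
     "Environment": {"Variables": {"UPSTREAM": "AKIAIOSFODNN7EXAMPLE"}}},
    {"FunctionName": "no-env"},
]

if __name__ == "__main__":
    for function_name, variable in find_env_secrets(SAMPLE_FUNCTIONS):
        print(f"{function_name}: review environment variable {variable}")
```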

If the enumeration command doesn’t reveal secrets upfront, Lambda is architected to be quite promiscuous with what it is willing to share: the stored code associated with each function can be downloaded and inspected.

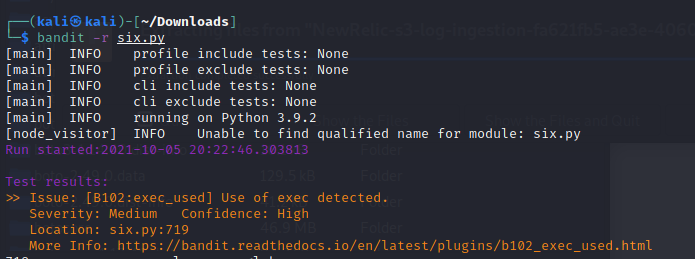

Analyzing with Bandit

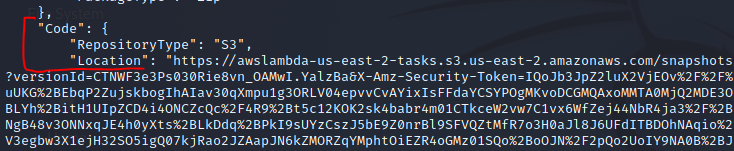

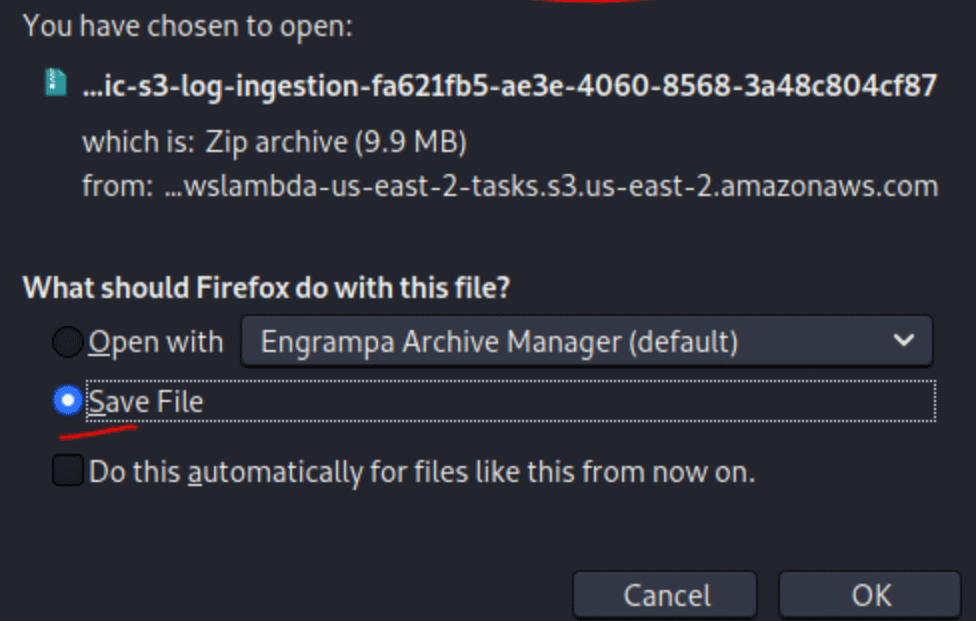

Using Bandit, static analysis of Python code may reveal exploitable vulnerabilities that can be leveraged for lateral movement, data exfiltration or privilege escalation. Within Pacu, issuing the ‘aws lambda get-function --function-name <name> --profile <pacu profile> --region <function region>’ command will provide the download URL for the function’s code.

Once in the directory containing the extracted Lambda code, Bandit is executed using the command ‘bandit -r <vulnerable function name.py>’ to perform static application security testing (SAST) or static code scanning. Review potential items of interest with internal stakeholders as SAST scanning tools are prone to a high volume of false positives. Further inspection of Lambda event source mappings and resource policies using the AWS CLI may reveal predictable routines or execution schedules that can be exploited for expanded environment access.
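Because false positives are common, it can help to triage Bandit's findings before the stakeholder review. The sketch below assumes Bandit was run with its JSON formatter (for example ‘bandit -r <directory> -f json -o report.json’) and keeps only findings at or above a chosen severity and confidence. The thresholds and the hand-written sample report are assumptions for illustration.

```python
import json

RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3}


def triage(report_json, min_severity="MEDIUM", min_confidence="MEDIUM"):
    """Keep findings at or above the given severity and confidence."""
    report = json.loads(report_json)
    kept = []
    for issue in report.get("results", []):
        severity = RANK.get(issue.get("issue_severity"), 0)
        confidence = RANK.get(issue.get("issue_confidence"), 0)
        if severity >= RANK[min_severity] and confidence >= RANK[min_confidence]:
            kept.append((issue["filename"], issue["line_number"],
                         issue["test_id"], issue["issue_text"]))
    return kept


# Hand-written sample in the shape of a Bandit JSON report.
SAMPLE_REPORT = json.dumps({
    "results": [
        {"filename": "handler.py", "line_number": 12, "test_id": "B602",
         "issue_severity": "HIGH", "issue_confidence": "HIGH",
         "issue_text": "subprocess call with shell=True identified."},
        {"filename": "handler.py", "line_number": 30, "test_id": "B101",
         "issue_severity": "LOW", "issue_confidence": "HIGH",
         "issue_text": "Use of assert detected."},
    ]
})

if __name__ == "__main__":
    for filename, line, test_id, text in triage(SAMPLE_REPORT):
        print(f"{filename}:{line} [{test_id}] {text}")
```

Findings that fall below the thresholds are not necessarily safe; the filter only decides what gets reviewed first.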

Figure 8. Pacu’s Lambda Enumeration module is useful for quantifying where functions exist and whether unsafe practices, such as storing access credentials or license keys in environment variables within functions, are occurring. Two license keys were exposed in the above example.

Figure 9. Enumerated Lambda functions can be viewed in more detail and have their stored code downloaded for offline static analysis using Bandit. Simply copy and paste the Location URL for the code in a web browser, unzip it and scan it with Bandit.

Figure 10. Screenshot of the prompt to download code associated with enumerated Lambda functions.

Figure 11. Bandit performing static analysis of stored code in the Lambda function. A medium issue is flagged along with reference documentation describing the vulnerability detected.

Enumerating, Scanning and Brute Forcing RDS Databases

Pacu’s ‘rds__explore_snapshots’ command automates the enumeration and copying of RDS database instance snapshots across every region where a database resides, satisfying numerous data exfiltration needs for attackers. The AWS CLI command ‘aws rds describe-db-instances --region <enter region name>’, paired with Nmap scans, can be used to further enumerate RDS instances, service versions and open ports, identifying potential vulnerabilities and enabling exploitation of the database. Enumeration results may reveal the master username, the encryption status of the RDS instance and other useful details that support password brute forcing or other attacks.
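The details mentioned above can be pulled out of the enumeration output with a few lines of code. This sketch assumes the JSON printed by ‘aws rds describe-db-instances’ has been parsed into a dictionary. It flags instances that are publicly accessible, unencrypted, or using a guessable master username. The list of guessable usernames and the sample output are assumptions for illustration.

```python
# Master usernames treated as guessable in this sketch (an assumption).
COMMON_USERNAMES = {"admin", "root", "postgres", "master", "sa"}


def rds_findings(describe_output):
    """Return (instance identifier, [issues]) for instances worth a look."""
    findings = []
    for db in describe_output.get("DBInstances", []):
        issues = []
        if db.get("PubliclyAccessible"):
            issues.append("publicly accessible")
        if not db.get("StorageEncrypted"):
            issues.append("storage not encrypted")
        if db.get("MasterUsername", "").lower() in COMMON_USERNAMES:
            issues.append("guessable master username")
        if issues:
            findings.append((db["DBInstanceIdentifier"], issues))
    return findings


# Hypothetical output trimmed to the fields used above.
SAMPLE_OUTPUT = {
    "DBInstances": [
        {"DBInstanceIdentifier": "orders-prod", "Engine": "mysql",
         "MasterUsername": "admin", "StorageEncrypted": False,
         "PubliclyAccessible": True},
        {"DBInstanceIdentifier": "analytics", "Engine": "postgres",
         "MasterUsername": "svc_analytics_7f3", "StorageEncrypted": True,
         "PubliclyAccessible": False},
    ]
}

if __name__ == "__main__":
    for identifier, issues in rds_findings(SAMPLE_OUTPUT):
        print(identifier + ": " + ", ".join(issues))
```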

Just a Fraction of Available Tools and Techniques

What I’ve outlined is a basic approach to assessing a small fraction of what’s available within AWS using simple techniques that may be used to exploit an AWS environment leveraging leaked access keys. Routine assessments from both an internal and external penetration testing angle may assist in the early identification and remediation of exploitable vulnerabilities.

To achieve positive security outcomes, security teams should focus their time and resources on adopting offensive security techniques and tools to mimic their adversaries. They should seek to understand AWS weak points from a holistic control perspective and communicate relevant risks to internal stakeholders for timely remediation. A special thanks to Rhino Security, Packt publishing, Subgraph and all those wonderful white hats out there helping us security folks get smarter through collaboration.

Adam Kohnke, CISSP, has more than 12 years of experience in IT operations, incident management, IT audit and information security management. A version of this article appeared in the January/February issue of InfoSecurity Professional magazine.
